In the previous article we got to know what the Kubernetes Scheduler is, how it works, and how to override its default behavior to control pod placement across Nodes using labels and selectors. Here is the link to the first article:

Controlling Kubernetes Pod Placement: Labels and Selectors – Overriding Default Scheduling (Part 1)

So let’s jump to a different method: Taints and Tolerations.

What are Taints and Tolerations?

A taint is a property of a node that prevents pods from being scheduled onto it unless they explicitly tolerate it.

Taints are applied to nodes to indicate that those nodes are not suitable for all pods. A taint therefore acts as an exclusion mechanism unless the pod has a matching toleration.

A toleration is a property of a pod that allows it to be scheduled onto nodes with matching taints. It tells the Kubernetes scheduler that the pod can tolerate the specified taints, so the pod may be scheduled on those nodes.

Real-world use case

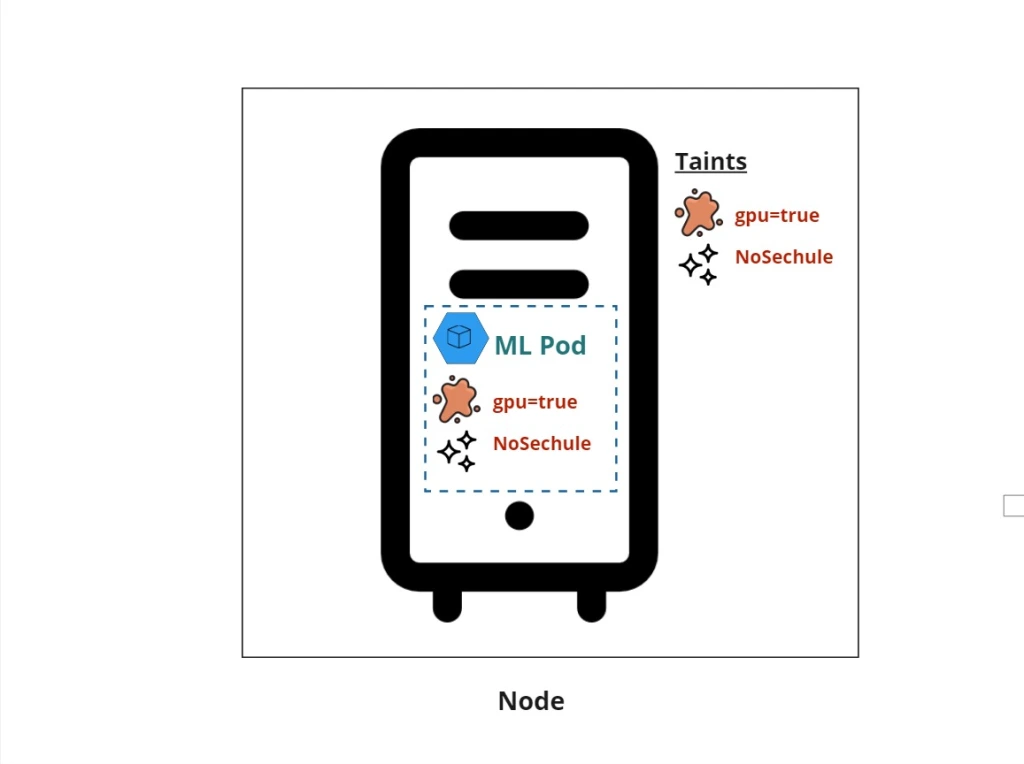

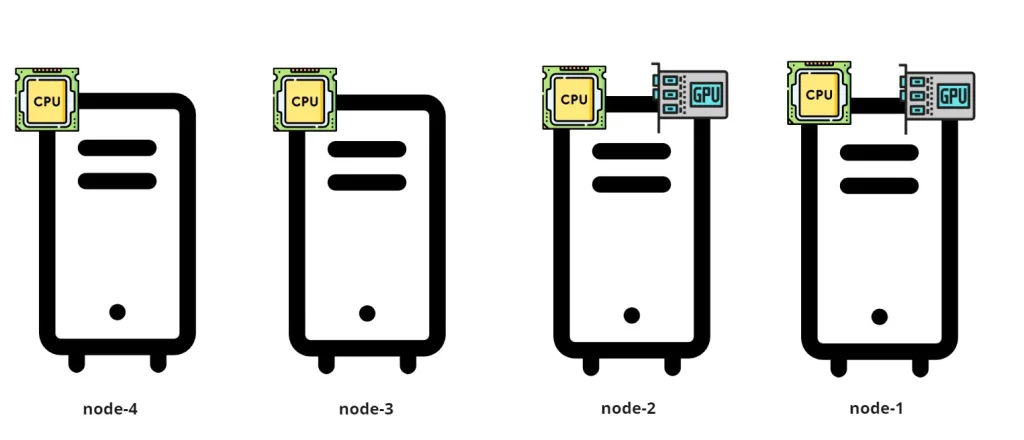

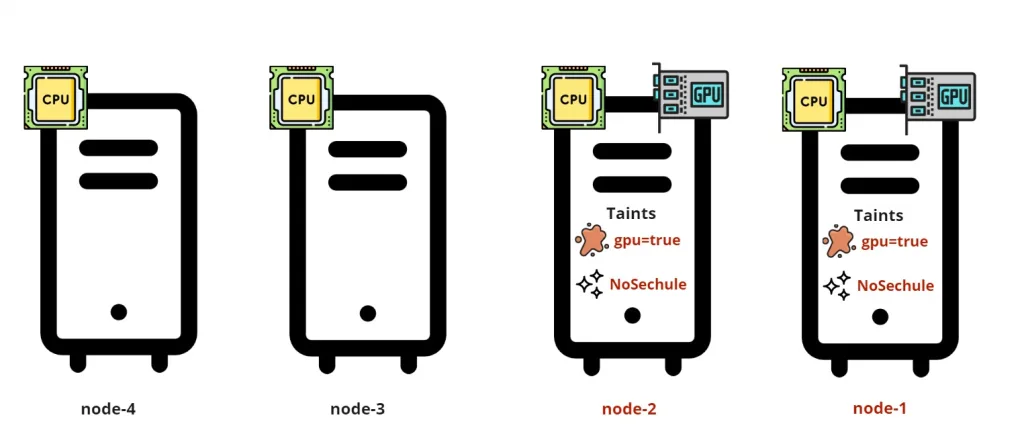

Let’s take a real-world example. We have dedicated GPU (Graphics Processing Unit) nodes that are specifically optimized for running machine learning training jobs.

You want to ensure that only certain workloads (e.g., machine learning training jobs) are scheduled on the GPU nodes, gpu-node1 and gpu-node2 in the images above. This maximizes resource utilization and prevents non-GPU workloads from using those resources.

Let’s use this use case while walking through the implementation of taints and tolerations to see how they work.

Taints Implementation

A taint is a key/value pair along with an effect. The key is a required string that identifies the taint. The value is an optional string associated with the key.

Effect specifies what happens to pods that do not tolerate the taint. It is an enum value with three possible effects:

- NoSchedule:

If a node has this taint, no new Pods will be scheduled on it unless they have a matching toleration. Pods that are already running on the node are not affected.

It works like a meeting: if you were invited, you can join; otherwise you can’t!

- PreferNoSchedule:

The scheduler will try to avoid placing Pods on nodes with this taint if other options are available. It may still place them there if no suitable alternative exists.

In other words, the Pod will preferably not be scheduled on the tainted Node. If the scheduler can’t find another suitable Node, this Node is still available as a place for the Pod!

- NoExecute:

If a node has this taint, any Pods already running on that node without a matching toleration will be evicted (removed). New Pods without the toleration cannot be scheduled there either.

NoExecute is like a meeting with no entry for uninvited people, where anyone already inside without an invitation has to leave 😀
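To make the difference between the three effects concrete, here is a minimal Python sketch. For a single taint, it models what happens to a pod depending on whether the pod tolerates that taint and whether it is already running on the node. The function name `taint_outcome` and the returned strings are hypothetical and were chosen for illustration. This is a simplified model of the behavior described above, not the scheduler's code; for example, it ignores `tolerationSeconds` on `NoExecute` tolerations.

```python
def taint_outcome(effect: str, tolerated: bool, already_running: bool) -> str:
    """Hypothetical model of how one taint effect affects one pod.

    effect: "NoSchedule", "PreferNoSchedule" or "NoExecute"
    tolerated: whether the pod has a matching toleration
    already_running: whether the pod is already running on the node
    """
    if effect not in ("NoSchedule", "PreferNoSchedule", "NoExecute"):
        raise ValueError(f"unknown taint effect: {effect}")
    if tolerated:
        # A matching toleration neutralizes the taint for this pod.
        return "keep running" if already_running else "can schedule"
    if effect == "NoSchedule":
        # Only blocks new placements; pods already on the node stay.
        return "keep running" if already_running else "cannot schedule"
    if effect == "PreferNoSchedule":
        # Soft rule: the scheduler avoids the node but may still use it.
        return "keep running" if already_running else "avoid if possible"
    # NoExecute: blocks new pods and evicts running pods without a toleration.
    return "evicted" if already_running else "cannot schedule"


for effect in ("NoSchedule", "PreferNoSchedule", "NoExecute"):
    print(effect, "->",
          taint_outcome(effect, tolerated=False, already_running=False), "/",
          taint_outcome(effect, tolerated=False, already_running=True))
# NoSchedule -> cannot schedule / keep running
# PreferNoSchedule -> avoid if possible / keep running
# NoExecute -> cannot schedule / evicted
```

The first column shows a new pod and the second shows a pod already on the node. Only `NoExecute` changes the second column, which is the key difference between it and `NoSchedule`.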

Apply Taints

You can taint a node imperatively using kubectl as follows:

    kubectl taint nodes <node-name> <taint-key>=<optional-taint-value>:<taint-effect>

Let’s apply that to our use case. We taint the dedicated GPU nodes using the taint key “gpu”, the value “true”, and the effect NoSchedule:

    kubectl taint nodes gpu-node1 gpu=true:NoSchedule
    kubectl taint nodes gpu-node2 gpu=true:NoSchedule
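The taint argument passed to kubectl taint has the shape KEY[=VALUE]:EFFECT, for example gpu=true:NoSchedule, or gpu:NoSchedule when there is no value. The Python sketch below splits such a string into its parts and checks the effect against the three allowed values, which catches typos such as NoScedule. `parse_taint` is a hypothetical helper for illustration, not kubectl's own parser. It also does not handle the trailing `-` form that kubectl uses to remove a taint (e.g. `gpu=true:NoSchedule-`).

```python
from typing import NamedTuple, Optional

VALID_EFFECTS = {"NoSchedule", "PreferNoSchedule", "NoExecute"}


class Taint(NamedTuple):
    key: str
    value: Optional[str]  # None when the spec has no "=VALUE" part
    effect: str


def parse_taint(spec: str) -> Taint:
    """Parse a KEY[=VALUE]:EFFECT string such as "gpu=true:NoSchedule" (hypothetical helper)."""
    # The effect comes after the last colon.
    key_value, sep, effect = spec.rpartition(":")
    if not sep or not key_value:
        raise ValueError(f"expected KEY[=VALUE]:EFFECT, got {spec!r}")
    if effect not in VALID_EFFECTS:
        raise ValueError(f"invalid effect {effect!r}")
    # The value is optional; split on the first "=" only.
    key, eq, value = key_value.partition("=")
    if not key:
        raise ValueError(f"missing key in {spec!r}")
    return Taint(key=key, value=value if eq else None, effect=effect)


print(parse_taint("gpu=true:NoSchedule"))  # Taint(key='gpu', value='true', effect='NoSchedule')
print(parse_taint("gpu:NoSchedule"))       # Taint(key='gpu', value=None, effect='NoSchedule')
```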

Alternatively, you can declare taints in a Node definition file (e.g. node-taints.yaml) as below and then use kubectl apply:

    apiVersion: v1
    kind: Node
    metadata:
      name: <node-name>
    spec:
      taints:
        - key: "gpu"
          value: "true"
          effect: "NoSchedule"

    kubectl apply -f node-taints.yaml

Important Note: Node objects are normally registered by the kubelet rather than created from a manifest you write. For an existing node, the imperative kubectl taint command is therefore the simplest and most common way to add or change taints.

List Taints

You can use kubectl get node and format its output with JSONPath (a query language for JSON structures). This shows only the taints section instead of the full node details:

    kubectl get node <node-name> -o jsonpath='{.spec.taints}'

You can also use kubectl get nodes with the same kind of output formatting to list all nodes along with their taints:

    kubectl get nodes -o=jsonpath='{range .items[*]}{.metadata.name}{" : "}{range .spec.taints[*]}{.key}{"="}{.value}{" ("}{.effect}{")"}{end}{"\n"}{end}'

- {range .items[*]}: Iterates over each node in the list.
- {.metadata.name}: Retrieves the name of the node.
- {" : "}: Adds a colon and spaces after the node name for readability.
- {range .spec.taints[*]}: Iterates over each taint applied to the node.
- {.key}{"="}{.value}{" ("}{.effect}{")"}: Formats each taint as key=value (effect) for clarity.
- {end}{"\n"}{end}: Closes the taint loop, adds a newline after each node, and closes the node loop.

The output will be formatted like this:

    node1 : gpu=true (NoSchedule)
    node2 : dedicated=highmem (NoExecute)
    node3 : gpu=false (PreferNoSchedule)

Toleration Implementation

To apply a toleration to a pod, we add a tolerations section under the spec section of the pod definition file.

We specify the taint key and one of two operators, Equal or Exists, to define how the toleration matches the taint on a node. With Equal (the default), the toleration's value must equal the taint's value. With Exists, any value matches, and no value should be specified. We also specify the effect again in the toleration! But why?

When you add a toleration to a Pod, you also specify an effect. This is used to explicitly match the Pod’s toleration with the taint on the node. An empty effect matches all effects.

If the toleration’s key, value (according to the operator), and effect all match a taint, the Pod is allowed to be scheduled on the node with that taint.
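The matching rules can be summarized in a short Python sketch. It follows the rules documented for Kubernetes tolerations:

- An empty toleration effect matches every effect.
- An empty key with Exists matches every taint.
- Exists ignores the value.
- Equal, the default operator, requires the values to be equal.

The `Taint` and `Toleration` classes and the `tolerates` function are illustrative stand-ins, not the Kubernetes client API.

```python
from dataclasses import dataclass


@dataclass
class Taint:
    key: str
    effect: str
    value: str = ""


@dataclass
class Toleration:
    key: str = ""            # empty key + "Exists" tolerates every taint
    operator: str = "Equal"  # "Equal" (default) or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Return True if the toleration matches the taint (simplified model)."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True  # the value is ignored
    if tol.operator == "Equal":
        return tol.value == taint.value
    return False  # unknown operator


gpu_taint = Taint(key="gpu", value="true", effect="NoSchedule")
print(tolerates(Toleration(key="gpu", operator="Equal", value="true", effect="NoSchedule"), gpu_taint))  # True
print(tolerates(Toleration(key="gpu", operator="Exists"), gpu_taint))  # True
print(tolerates(Toleration(key="gpu", operator="Equal", value="true", effect="NoExecute"), gpu_taint))  # False
```

The last call fails only because of the effect. This is why the effect is repeated in the toleration: it lets a pod tolerate, for example, a NoSchedule taint without also tolerating a NoExecute taint that has the same key.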

    apiVersion: v1
    kind: Pod
    metadata:
      name: ml-training-job
    spec:
      tolerations:
        - key: <Taint Key>
          operator: <Equal | Exists>
          value: <Taint Value>      # omit when operator is Exists
          effect: <Taint Effect>

Applying toleration

Because the matching also takes the effect into account, different Pods can have different levels of tolerance for the taints on nodes. This gives you more control over pod placement based on your needs.

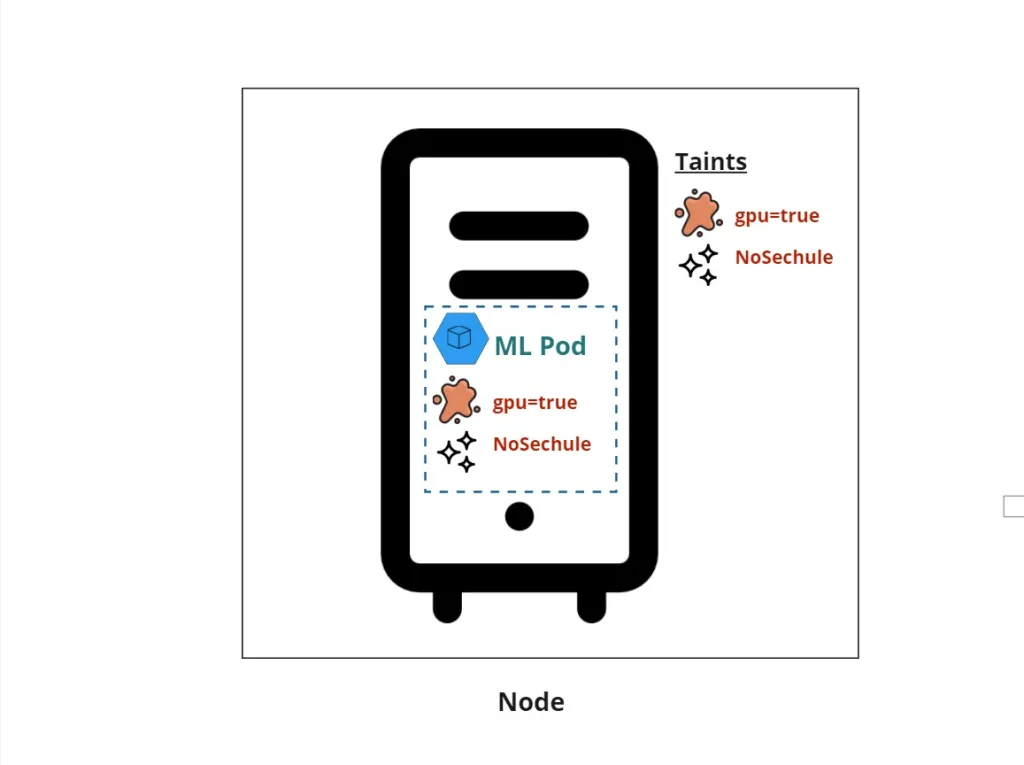

Applying that to our use case:

    apiVersion: v1
    kind: Pod
    metadata:
      name: ml-training-job
    spec:
      tolerations:
        - key: "gpu"
          operator: "Equal"
          value: "true"
          effect: "NoSchedule"
      containers:
        - name: ml-training-container
          image: my-ml-image:latest

Behavior and Result

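- When this pod is submitted, the scheduler checks its tolerations. Since it has a toleration for the gpu=true:NoSchedule taint, the pod can be scheduled on either gpu-node1 or gpu-node2.
- Any other pods that do not have this toleration (like general web service pods or database pods) will not be scheduled on the GPU nodes. This reserves those resources specifically for ML workloads.

Keep in mind that the toleration only allows the ML pod onto the GPU nodes; it does not force it there. The pod could still be placed on any untainted node. Pinning it to the GPU nodes requires combining taints with Node Affinity, which is covered in the next part.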
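Here is a small Python simulation of the filtering step for this use case. It assumes a hypothetical third, untainted node called web-node1 and a web pod with no tolerations. It models only taint/toleration filtering and skips PreferNoSchedule taints because they are soft. It ignores resource requests and every other scheduler check. As the first printed line shows, the toleration permits the GPU nodes without restricting the ML pod to them.

```python
from typing import List

# Hypothetical cluster: two tainted GPU nodes and one untainted general node.
# Taints are (key, value, effect) tuples.
NODES = {
    "gpu-node1": [("gpu", "true", "NoSchedule")],
    "gpu-node2": [("gpu", "true", "NoSchedule")],
    "web-node1": [],  # hypothetical node name, no taints
}

# Tolerations are (key, operator, value, effect) tuples.
ML_POD = [("gpu", "Equal", "true", "NoSchedule")]
WEB_POD = []  # no tolerations


def tolerated(taint, tolerations) -> bool:
    """True if any toleration matches the taint (same rules as Kubernetes, simplified)."""
    key, value, effect = taint
    for t_key, op, t_value, t_effect in tolerations:
        if t_effect and t_effect != effect:
            continue
        if t_key and t_key != key:
            continue
        if op == "Exists" or (op == "Equal" and t_value == value):
            return True
    return False


def feasible_nodes(tolerations) -> List[str]:
    """Nodes where every NoSchedule/NoExecute taint is tolerated."""
    result = []
    for name, taints in NODES.items():
        hard = [t for t in taints if t[2] in ("NoSchedule", "NoExecute")]
        if all(tolerated(t, tolerations) for t in hard):
            result.append(name)
    return result


print("ml-training-job:", feasible_nodes(ML_POD))  # ['gpu-node1', 'gpu-node2', 'web-node1']
print("web pod:", feasible_nodes(WEB_POD))         # ['web-node1']
```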

List Tolerations

In the same way, you can use kubectl get pod with JSONPath to extract only the tolerations, without all the extra details of the pod:

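    kubectl get pod <pod-name> -o=jsonpath='{.spec.tolerations}'

The output will look similar to this:

    [{"key":"gpu","operator":"Equal","value":"true","effect":"NoSchedule"}]

Depending on your cluster, the list may also include default tolerations that Kubernetes adds automatically. Examples are tolerations for the node.kubernetes.io/not-ready and node.kubernetes.io/unreachable taints.

Cons of Taints and Tolerations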

- Complexity in Management:

Managing taints and tolerations can add complexity to your Kubernetes environment. As the number of nodes and workloads grows, it can become an exhausting task to keep track of which taints are applied to which nodes and which tolerations each Pod requires.

- Potential for Misconfiguration:

Incorrectly applying taints or tolerations can lead to unintended scheduling behavior. For example, if a node is tainted incorrectly, it might prevent essential pods from being scheduled, leading to service disruptions.

- Increased Scheduling Time:

With complex taint and toleration configurations, the scheduling process might take longer.

- Inflexibility with Pod Behavior:

Once a taint is applied, Pods without the necessary tolerations cannot be scheduled on the node. This inflexibility can lead to resource underutilization: capacity on tainted nodes may sit idle while Pods that cannot tolerate the taint wait to be scheduled.

Next

Now we have two methods to use, and possibly combine. Given the cons of each one, though, some pod placement controls are still not covered. In the next article we will get to know Node Selector & Node Affinity, and see how to combine Taints and Tolerations with Node Affinity to control pod placement.

- Controlling Kubernetes Pod Placement: Labels and Selectors – Overriding Default Scheduling (Part 1)

- Controlling Kubernetes Pod Placement: Taints & Toleration – Overriding Default Scheduling (Part 2)

- Controlling Kubernetes Pod Placement: Node Selector & Node Affinity – Overriding Default Scheduling (Part 3)

- Controlling Kubernetes Pod Placement: Requirements & Limits and Daemon Sets – Overriding Default Scheduling (Part 4)