Introduction

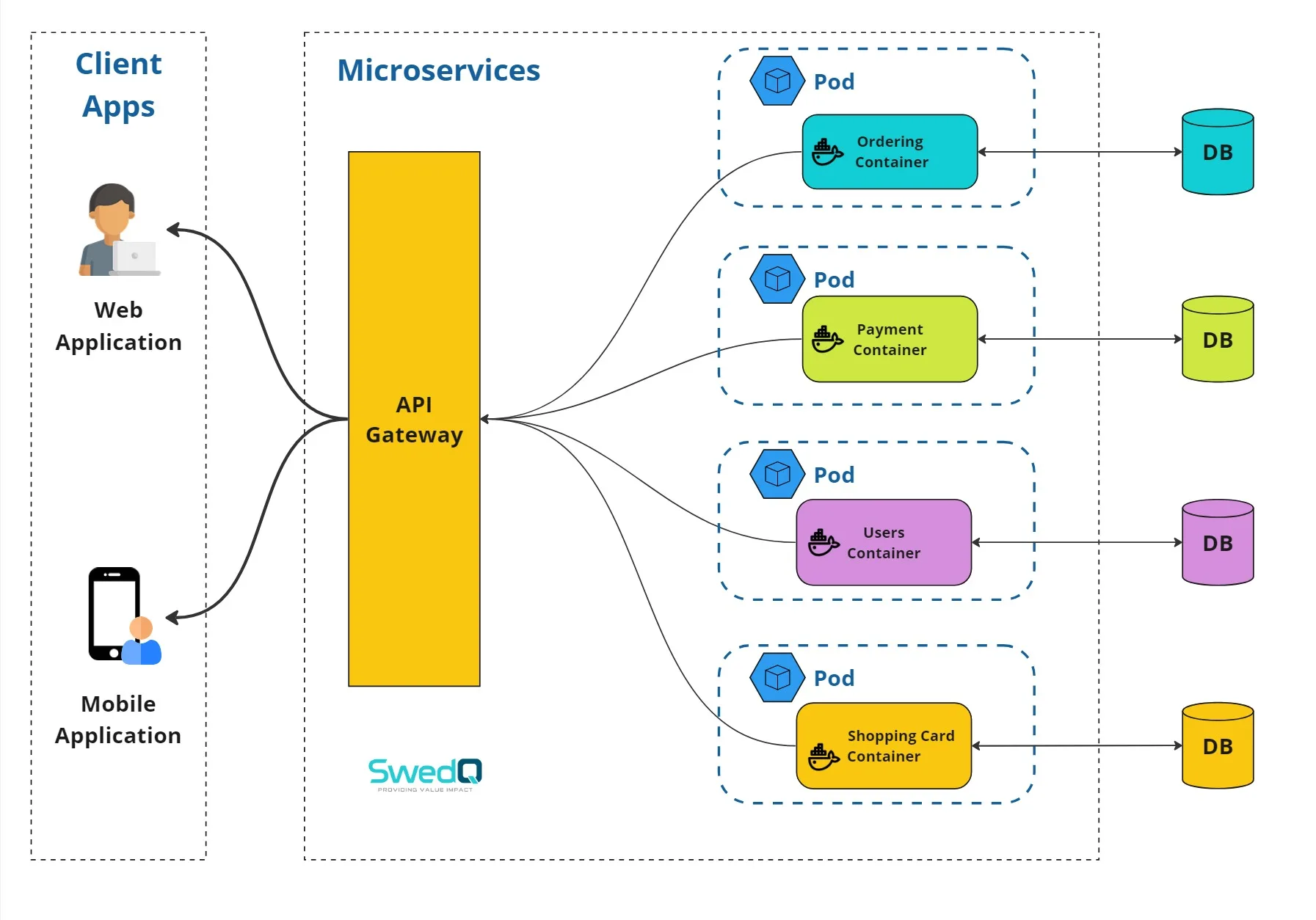

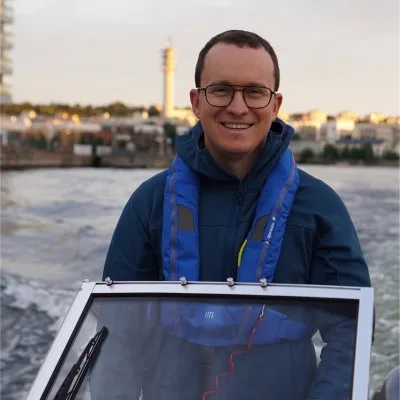

Suppose you have a microservice application where each microservice is deployed as a container and, following the single responsibility principle, each microservice is responsible for one aspect of the application’s functionality. For example, an e-commerce web application might consist of an Ordering microservice, a Payment microservice, a Users microservice, and a Shopping Cart microservice.

One of the cross-cutting concerns in your application is logging and monitoring, so you need to collect logs and metrics from each microservice, that is, from each container.

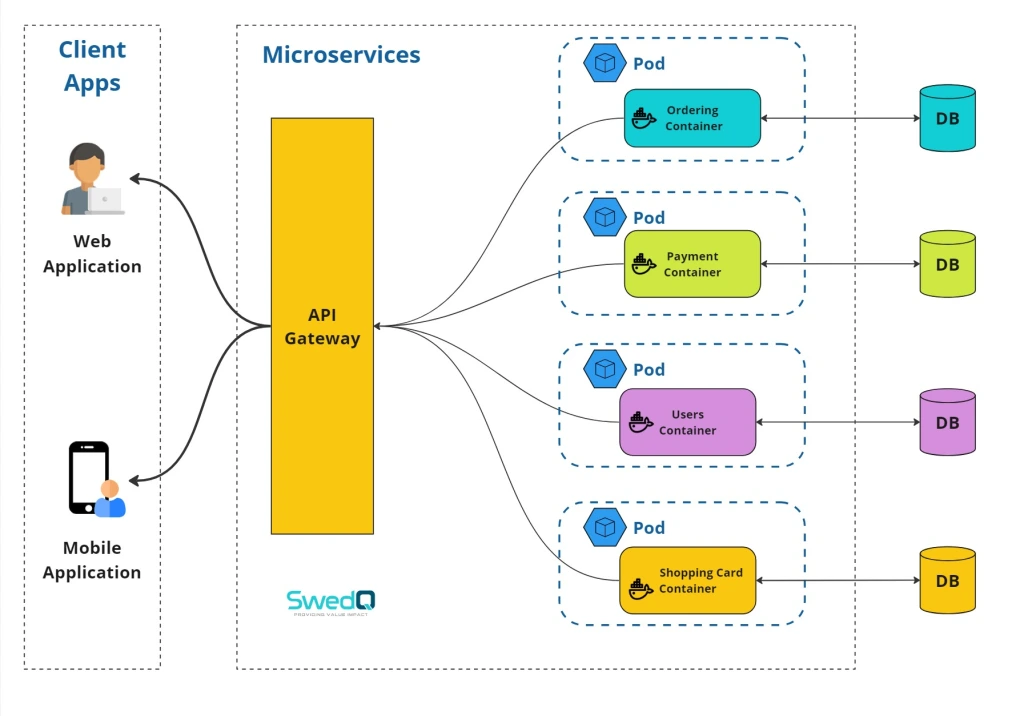

Proposed solution using a multi-container pod

One proposed solution is to create a multi-container pod in which the main microservice container runs alongside a logging/monitoring container within the same pod.

You might know about DaemonSets, which ensure that a copy of a specific pod runs on every node in the cluster. You could use a DaemonSet to place a logging and monitoring agent pod on each cluster node (one per node).

However, while agents running as node-level pods may have access to more detailed node-level logs, this article is about logging and monitoring that is tightly coupled to the application at the application level, where the logging and monitoring container needs to scale with the application itself.
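For contrast, the node-level approach mentioned above might look like the minimal DaemonSet sketch below. The names node-log-agent and log-agent-image are hypothetical placeholders for whatever agent you run; the hostPath mount of the node's /var/log directory is what gives the agent access to node-level logs.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-log-agent              # hypothetical name
spec:
  selector:
    matchLabels:
      app: node-log-agent
  template:
    metadata:
      labels:
        app: node-log-agent         # must match spec.selector.matchLabels
    spec:
      containers:
        - name: log-agent
          image: log-agent-image    # hypothetical agent image
          volumeMounts:
            - name: varlog
              mountPath: /var/log
              readOnly: true        # the agent only reads node logs
      volumes:
        - name: varlog
          hostPath:
            path: /var/log          # log directory on the node itself
```

One agent pod runs per node regardless of how many payment-service replicas exist, which is the decoupling from the application that the sidecar approach avoids.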

Patterns of multi-container pods

Here is a brief overview of the three multi-container pod patterns; in this article, we will focus on implementing the sidecar pattern.

We will cover the adapter and ambassador patterns in other articles on this blog.

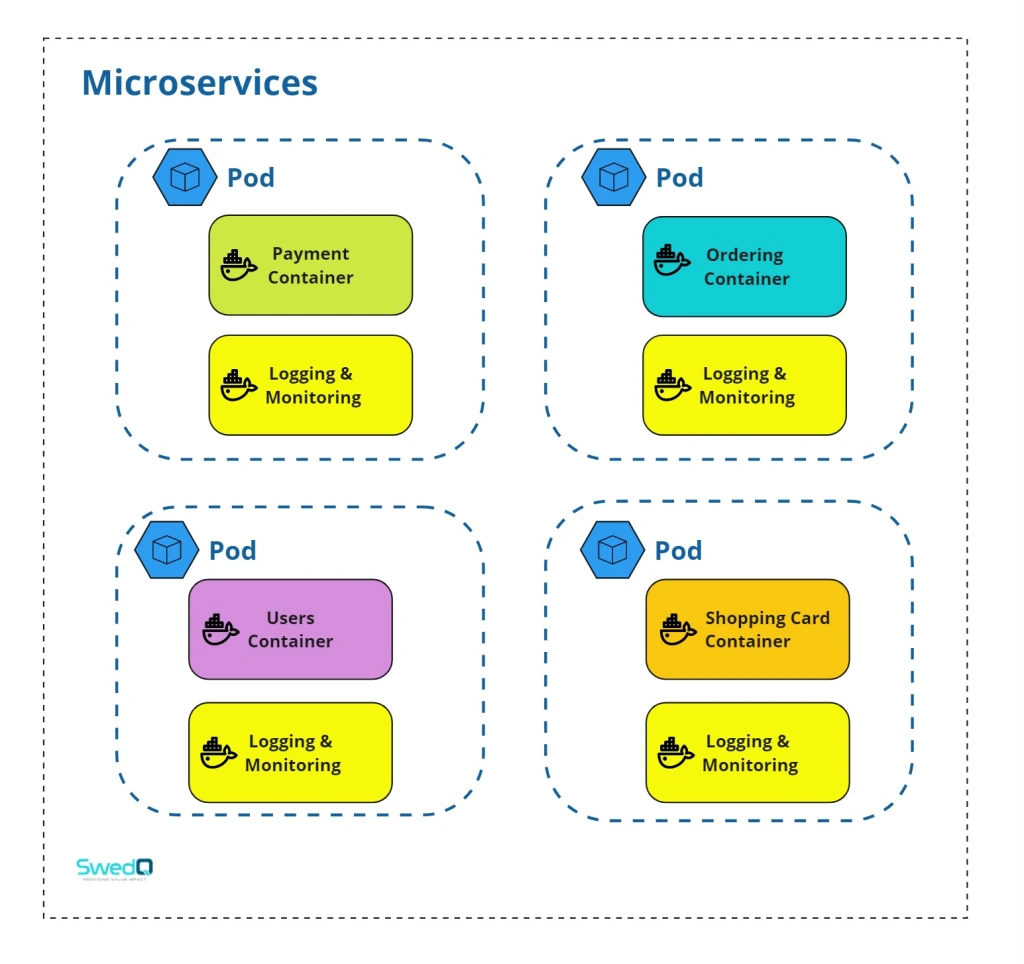

Sidecar pattern

The idea of the sidecar pattern is to extend the main container’s functionality by adding a complementary container (the sidecar) that runs alongside the main container and shares the same pod resources, such as the network namespace and volumes.

Adapter Pattern

The adapter container is responsible for facilitating communication between the main container and an external service that uses different interfaces and protocols.

In our example, logging and monitoring could be an external cloud solution. The main responsibility of your adapter container is to send logs from your main container to that external cloud service’s interface, then receive the resulting metrics and transform them to conform to the standardized format used in your application.

In a nutshell, it adapts your application containers’ interfaces to the interfaces of the external logging and monitoring service, so data transformation is the primary concern.

Ambassador Pattern

Like the adapter container, the ambassador container is responsible for facilitating communication between the main container and external services, and transformation may happen here as well. The primary concern, however, is abstracting away the details of communicating with the external service, which in our example is the logging and monitoring service.

Let’s jump to the sidecar pattern!

Sidecar pattern

Communicating using the localhost network interface

Containers in the same pod share the same network namespace, so the main container can communicate with the sidecar container using localhost.

Note: The main container and the sidecar container must not listen on the same port, because only one process can listen on a given port within a network namespace.

Defining a multi-container pod

You might have noticed that the containers section in a pod definition file is an array, so you can simply add the logging/monitoring sidecar container alongside the main container.

In the code sample below, we add a logging and monitoring container alongside the payment microservice container and expose each one on a different port.

Notice the command in the payment-service container, where we send a message from the main container to the sidecar container to test connectivity using the nc utility (short for Netcat), a tool for reading from and writing to network connections (thanks to ChatGPT 😀).

nc localhost 9090 sends a message to the sidecar container.

nc -l -p 9090, run in the sidecar container, listens for incoming connections on port 9090.

    apiVersion: v1
    kind: Pod
    metadata:
      name: payment-service-pod
    spec:
      # Run each container once for this test instead of restarting it after it exits
      restartPolicy: Never
      containers:
        - name: payment-service
          image: payment-service-image
          ports:
            - containerPort: 8080
          # Retry until the sidecar is listening, since containers in a pod do not wait for one another to be ready
          command: ["/bin/sh", "-c", "until echo 'Hello from payment service container' | nc localhost 9090; do sleep 1; done"]
        - name: logging-and-monitoring
          image: logging-and-service-image
          ports:
            - containerPort: 9090
          command: ["/bin/sh", "-c", "nc -l -p 9090"]

Then apply the pod definition to your cluster and check the logs for each container to see the communication between them.

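    kubectl apply -f payment-service-pod.yaml
    kubectl logs payment-service-pod -c payment-service
    kubectl logs payment-service-pod -c logging-and-monitoring

The message is printed by the logging-and-monitoring container, which received it over localhost; on a successful send, the payment-service container itself prints nothing, because echo’s output is piped into nc: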

Hello from payment service container

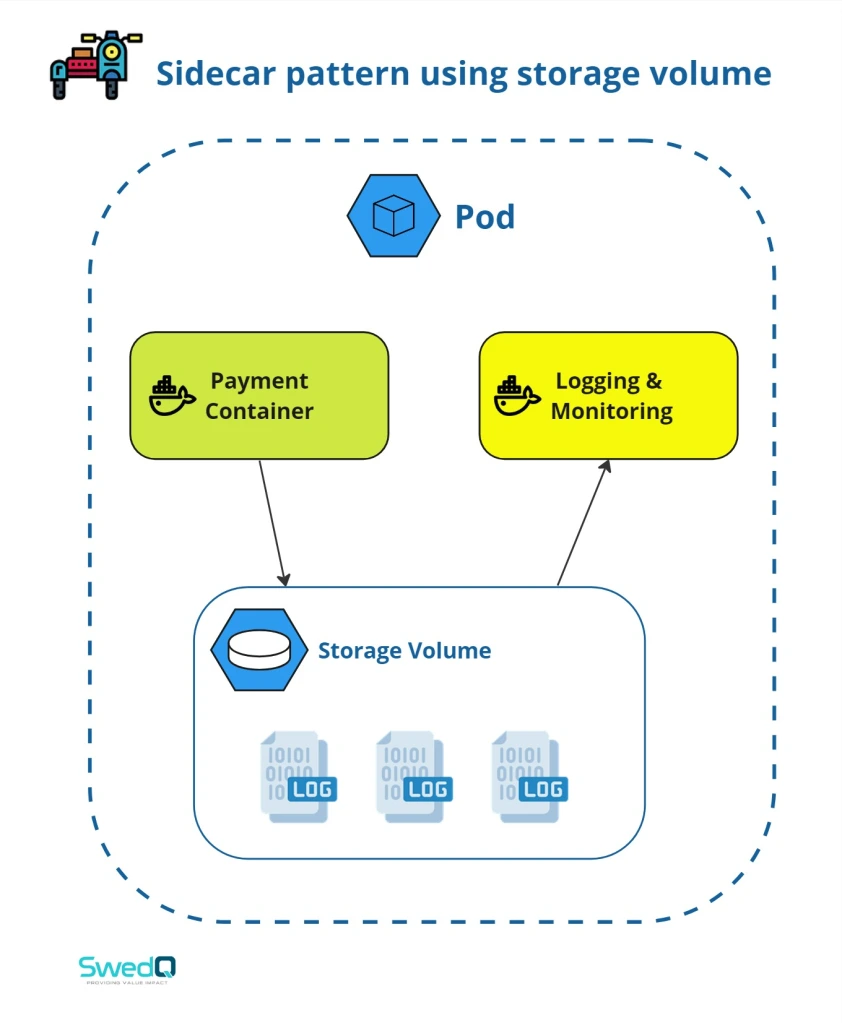
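In the manifest above, each container runs a single command and then exits, which suits a quick connectivity test but not a real logging sidecar that has to run for as long as the application does. Kubernetes also supports native sidecar containers (introduced in 1.28 behind the SidecarContainers feature gate and enabled by default from 1.29): an entry under initContainers with restartPolicy: Always is started before the regular containers and kept running alongside them. The following is a minimal sketch under those assumptions; busybox:1.36 stands in for the hypothetical payment-service-image and logging-and-service-image, and the pod name is made up for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: payment-service-pod-native-sidecar   # hypothetical name
spec:
  initContainers:
    # restartPolicy: Always turns this init container into a native sidecar:
    # it starts before the regular containers and keeps running next to them
    - name: logging-and-monitoring
      image: busybox:1.36                     # stand-in for logging-and-service-image
      restartPolicy: Always
      # Accept one connection at a time and print what arrives, forever
      command: ["/bin/sh", "-c", "while true; do nc -l -p 9090; done"]
  containers:
    - name: payment-service
      image: busybox:1.36                     # stand-in for payment-service-image
      command:
        - /bin/sh
        - -c
        - |
          # The sidecar process starts first but may not be listening yet, so retry
          until echo 'Hello from payment service container' | nc localhost 9090; do sleep 1; done
          # Keep the main container running so the pod stays up
          while true; do sleep 3600; done
```

You can then follow the sidecar’s output with kubectl logs -f payment-service-pod-native-sidecar -c logging-and-monitoring.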

Communicating using shared volume storage

You can also share a storage volume between both containers: the volume is created along with the pod, and all containers within the pod can read from and write to it.

Defining a multi-container pod using a shared storage volume

In the pod definition file below, we:

- define a volume named shared-volume of type emptyDir in the pod;

- mount the same shared volume in both containers;

- have the payment service container write the message “Hello from payment service container” to a file named “message”;

- have the logging and monitoring container read from the “message” file.

    apiVersion: v1
    kind: Pod
    metadata:
      name: payment-service-pod
    spec:
      # Run each container once for this test instead of restarting it after it exits
      restartPolicy: Never
      volumes:
        - name: shared-volume
          emptyDir: {}
      containers:
        - name: payment-service
          image: payment-service-image
          ports:
            - containerPort: 8080
          volumeMounts:
            - name: shared-volume
              mountPath: /shared-data
          command: ["/bin/sh", "-c", "echo 'Hello from payment service container' > /shared-data/message"]
        - name: logging-and-monitoring
          image: logging-and-service-image
          ports:
            - containerPort: 9090
          volumeMounts:
            - name: shared-volume
              mountPath: /shared-data
          # Wait until the message file exists and is non-empty, then print it
          command: ["/bin/sh", "-c", "until [ -s /shared-data/message ]; do sleep 1; done; cat /shared-data/message"]

Main advantages of using the sidecar pattern

- Separation of concerns: complementary functionality lives in its own container instead of being built into the main application.

- Scalability and reusability: the same sidecar container image can be reused across multiple applications or pods, and you can size the sidecar’s CPU and memory independently of the main container by setting per-container resource requests and limits in the pod definition file (https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/). Replicas are still scaled per pod, so the sidecar scales out together with the application.

- Easy deployment: the sidecar container is deployed alongside the main container; both are declared in the same pod definition file and configured through the same pod configuration.

- Enhanced observability: in this article’s example, the sidecar can improve observability by providing metrics and logs that comply with the application’s standards.
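To make the scalability and observability points above more concrete, here is a minimal sketch of a long-running logging sidecar with its own resource requests and limits. The payment container appends lines to a file on the shared emptyDir volume, and the sidecar streams that file to its own standard output, where kubectl logs can read it. busybox:1.36 stands in for the article’s hypothetical images, the pod name payment-service-streaming-pod is made up, and the CPU and memory values are placeholders, not recommendations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: payment-service-streaming-pod   # hypothetical name
spec:
  volumes:
    - name: shared-volume
      emptyDir: {}
  containers:
    - name: payment-service
      image: busybox:1.36                # stand-in for payment-service-image
      command:
        - /bin/sh
        - -c
        - |
          # Append a line to the shared log file every 5 seconds
          while true; do
            echo "Hello from payment service container" >> /shared-data/app.log
            sleep 5
          done
      volumeMounts:
        - name: shared-volume
          mountPath: /shared-data
      resources:                         # placeholder values
        requests:
          cpu: 250m
          memory: 128Mi
        limits:
          cpu: 500m
          memory: 256Mi
    - name: logging-and-monitoring
      image: busybox:1.36                # stand-in for logging-and-service-image
      command:
        - /bin/sh
        - -c
        - |
          # Wait for the log file, then stream new lines to this container's stdout
          until [ -f /shared-data/app.log ]; do sleep 1; done
          tail -f /shared-data/app.log
      volumeMounts:
        - name: shared-volume
          mountPath: /shared-data
          readOnly: true                 # the sidecar only reads the shared log
      resources:                         # a smaller, separately chosen budget (placeholder values)
        requests:
          cpu: 50m
          memory: 32Mi
        limits:
          cpu: 100m
          memory: 64Mi
```

Because each container declares its own resources block, the sidecar can be given a smaller budget than the main container, and kubectl logs payment-service-streaming-pod -c logging-and-monitoring shows the streamed lines.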

In summary, it’s important to check whether the sidecar pattern suits your application’s requirements, taking into account its drawbacks, such as the additional CPU and memory each sidecar consumes in every pod replica.

Thanks for reading!